root@qube:~# parted -s /dev/sdd print

Warning: Could not determine physical sector size for /dev/sdd.

Using the logical sector size (512).

Model: ViPowER VP-89118(SD1) (scsi)

Disk /dev/sdd: 250GB

Sector size (logical/physical): 512B/512B

Partition Table: gpt

Number  Start   End     Size    File system  Name     Flags

 1      1049kB  1026MB  1024MB  ext3         primary

 2      1026MB  6146MB  5120MB               primary

 3      6146MB  6147MB  1049kB               primary

 4      6147MB  6148MB  1049kB               primary

 5      6148MB  7172MB  1024MB               primary

 6      7172MB  242GB   235GB                primary

root@qube:~#

The "format" step ended in an error, but the partitions were created.

Let's look at /boot.

root@qube:~# mkdir /tmp/boot

mkdir: cannot create directory `/tmp/boot': File exists

root@qube:~# mount /dev/sdd1 /tmp/boot

mount: unknown filesystem type 'linux_raid_member'

root@qube:~#

Of course that fails. I never learn.

Connect the disk to the LS-VL.

root@vl:~# parted -s /dev/sdb print

Model: ViPowER VP-89118(SD1) (scsi)

Disk /dev/sdb: 250GB

Sector size (logical/physical): 512B/512B

Partition Table: gpt

Number  Start   End     Size    File system  Name     Flags

 1      1049kB  1026MB  1024MB  ext3         primary

 2      1026MB  6146MB  5120MB               primary

 3      6146MB  6147MB  1049kB               primary

 4      6147MB  6148MB  1049kB               primary

 5      6148MB  7172MB  1024MB               primary

 6      7172MB  242GB   235GB                primary

root@vl:~#

So, how do I mount this again?

root@vl:~# cat /proc/mdstat

Personalities : [linear] [raid0] [raid1] [raid6] [raid5] [raid4]

unused devices:

I should be using mdadm for this.

root@vl:~# mkdir /tmp/boot

root@vl:~# mount /dev/sdb1 /tmp/boot/

mount: unknown filesystem type 'linux_raid_member'

root@vl:~#

root@vl:~# mdadm --help

-su: mdadm: command not found

root@vl:~#

Huh? Was the HDD I installed it on the other day a different one?

root@vl:~# apt-get install mdadm

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following extra packages will be installed:

exim4-base exim4-config exim4-daemon-light libpcre3

Suggested packages:

mail-reader eximon4 exim4-doc-html exim4-doc-info file spf-tools-perl swaks

Recommended packages:

default-mta mail-transport-agent

The following NEW packages will be installed:

exim4-base exim4-config exim4-daemon-light libpcre3 mdadm

0 upgraded, 5 newly installed, 0 to remove and 5 not upgraded.

Need to get 2745 kB of archives.

After this operation, 5685 kB of additional disk space will be used.

Do you want to continue [Y/n]? y

Get:1 http://ftp.jp.debian.org/debian/ squeeze/main exim4-config all 4.72-6+squeeze2 [464 kB]

Get:2 http://ftp.jp.debian.org/debian/ squeeze/main exim4-base armel 4.72-6+squeeze2 [1013 kB]

Get:3 http://ftp.jp.debian.org/debian/ squeeze/main libpcre3 armel 8.02-1.1 [234 kB]

Get:4 http://ftp.jp.debian.org/debian/ squeeze/main exim4-daemon-light armel 4.72-6+squeeze2 [567 kB]

Get:5 http://ftp.jp.debian.org/debian/ squeeze/main mdadm armel 3.1.4-1+8efb9d1+squeeze1 [468 kB]

Fetched 2745 kB in 1s (2617 kB/s)

Preconfiguring packages ...

Package configuration

Configuring mdadm

If the system's root file system is located on an MD array (RAID), it needs to be started early during the boot sequence. If it is located on a logical volume (LVM), which is on MD, all constituent arrays need to be started.

If you know exactly which arrays are needed to bring up the root file system, and you want to postpone starting all other arrays to a later point in the boot sequence, enter the arrays to start here. Alternatively, enter 'all' to simply start all available arrays.

If you do not need or want to start any arrays for the root file system, leave the answer blank (or enter 'none'). This may be the case if you are using kernel autostart or do not need any arrays to boot.

Please enter 'all', 'none', or a space-separated list of devices such as 'md0 md1' or 'md/1 md/d0' (the leading '/dev/' can be omitted).

MD arrays needed for the root file system: none

<Ok>

Package configuration

Configuring mdadm

Once the base system has booted, mdadm can start all MD arrays (RAIDs) specified in /etc/mdadm/mdadm.conf which have not yet been started. This is recommended unless multiple device (MD) support is compiled into the kernel and all partitions are marked as belonging to MD arrays, with type 0xfd (as those and only those will be started automatically by the kernel).

Do you want to start MD arrays automatically?

<Yes> <No>

Selecting previously deselected package exim4-config.

(Reading database ... 19845 files and directories currently installed.)

Unpacking exim4-config (from .../exim4-config_4.72-6+squeeze2_all.deb) ...

Selecting previously deselected package exim4-base.

Unpacking exim4-base (from .../exim4-base_4.72-6+squeeze2_armel.deb) ...

Selecting previously deselected package libpcre3.

Unpacking libpcre3 (from .../libpcre3_8.02-1.1_armel.deb) ...

Selecting previously deselected package exim4-daemon-light.

Unpacking exim4-daemon-light (from .../exim4-daemon-light_4.72-6+squeeze2_armel.deb) ...

Selecting previously deselected package mdadm.

Unpacking mdadm (from .../mdadm_3.1.4-1+8efb9d1+squeeze1_armel.deb) ...

Processing triggers for man-db ...

Setting up exim4-config (4.72-6+squeeze2) ...

Adding system-user for exim (v4)

Setting up exim4-base (4.72-6+squeeze2) ...

Setting up libpcre3 (8.02-1.1) ...

Setting up exim4-daemon-light (4.72-6+squeeze2) ...

Starting MTA: exim4.

ALERT: exim paniclog /var/log/exim4/paniclog has non-zero size, mail system possibly broken ... failed!

Setting up mdadm (3.1.4-1+8efb9d1+squeeze1) ...

Generating array device nodes... done.

Generating mdadm.conf... done.

Starting MD monitoring service: mdadm --monitor.

Assembling MD array md0...done (degraded [1/4]).

Assembling MD array md1...done (degraded [1/4]).

Assembling MD array md10...done (degraded [1/4]).

Generating udev events for MD arrays...done.

root@vl:~# cat /proc/mdstat

Personalities : [linear] [raid0] [raid1] [raid6] [raid5] [raid4]

md10 : active raid1 sdb5[4]

1000436 blocks super 1.2 [4/1] [_U__]

md1 : active raid1 sdb2[4]

4999156 blocks super 1.2 [4/1] [_U__]

md0 : active raid1 sdb1[1]

1000384 blocks [4/1] [_U__]

unused devices:

root@vl:~#
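In the /proc/mdstat output above, `[4/1]` means each RAID1 array is defined with 4 members but only 1 is active, and in `[_U__]` each `U` is an active member and each `_` a missing one. So "degraded [1/4]" is expected here: the arrays were created with 4 members and only this one disk is attached. A minimal sketch for checking that at a glance, written in the shell style of this log; `md_degraded` and its output format are hypothetical, not part of mdadm, and it assumes only POSIX awk:

```shell
#!/bin/sh
# md_degraded [FILE]: list each md array in /proc/mdstat-format text
# as "NAME ok|degraded ACTIVE/TOTAL". Hypothetical helper, not part of mdadm.
md_degraded() {
    awk '
        # array header, e.g. "md0 : active raid1 sdb1[1]"
        /^md[0-9]+ :/ { name = $1; next }
        # status line of that array, e.g. "1000384 blocks [4/1] [_U__]"
        name != "" && match($0, /\[[0-9]+\/[0-9]+\]/) {
            # "[4/1]" -> n[1] = 4 (total), n[2] = 1 (active)
            split(substr($0, RSTART + 1, RLENGTH - 2), n, "/")
            state = (n[2] + 0 < n[1] + 0) ? "degraded" : "ok"
            printf "%s %s %s/%s\n", name, state, n[2], n[1]
            name = ""
        }
    ' "${1:-/proc/mdstat}"
}
```

Sourced into a shell and called with no argument, it reads /proc/mdstat; against the output above it would report md10, md1 and md0 each as `degraded 1/4`. For the authoritative state of a single array, `mdadm --detail /dev/md0` is the tool to use.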

Mount it.

root@vl:~# mount /dev/md0 /tmp/boot

root@vl:~# ls /tmp/boot/

builddate.txt  log.tgz  u-boot_lsqvl.bin  uImage.buffalo.org

initrd.buffalo  lost+found  uImage.buffalo

root@vl:~#

Everything is there.

What about /?

root@vl:~# ls /tmp/root

7  boot  etc  initrd  lost+found  proc  sbin  tmp  var

bin  dev  home  lib  mnt  root  sys  usr  www

root@vl:~#

Same here.

It looks like the data was synced either during the format or after the reboot that followed it.
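The log shows only the mount of md0; the arrays themselves were assembled by the mdadm package's post-install script. If they are not running (for example, after answering No to starting arrays automatically), they can be assembled and mounted by hand. A minimal sketch, assuming sdb1 holds /boot (md0) and sdb2 holds / (md1), as the 1 GB and 5 GB partition sizes suggest; `mdadm --examine /dev/sdb2` can confirm which array a partition belongs to before trying. The helper names `mount_md` and `mount_ls_disk` and the mount points are hypothetical. `--run` asks mdadm to start an array even when fewer members are given than were present when it was last active.

```shell
#!/bin/sh
# Sketch: assemble and mount a LinkStation disk's RAID1 members by hand.
# ASSUMPTIONS: sdb1 -> md0 (/boot), sdb2 -> md1 (/), inferred from sizes;
# mount points are hypothetical. Set RUN=echo to only print the commands.
RUN=${RUN:-}

# mount_md MD PARTITION MOUNTPOINT
mount_md() {
    $RUN mkdir -p "$3"
    # start the single-member array even though 3 of 4 mirrors are missing
    $RUN mdadm --assemble --run "/dev/$1" "$2"
    # mount read-only for inspection
    $RUN mount -o ro "/dev/$1" "$3"
}

# mount_ls_disk [DISK]  (default /dev/sdb)
mount_ls_disk() {
    mount_md md0 "${1:-/dev/sdb}1" /tmp/boot
    mount_md md1 "${1:-/dev/sdb}2" /tmp/root
}
```

`RUN=echo mount_ls_disk /dev/sdb` only prints the six commands; run `mount_ls_disk` as root without `RUN=echo` to execute them. If the arrays are already running, as in the log above, skip the assemble step and just mount `/dev/md0` and `/dev/md1`.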

I shut down the LS-VL,

put the HDD that had been attached to the LS-VL over USB back into its cartridge,

inserted it into slot 1 of the LS-QVL, and powered it on.

It booted without trouble, and LED1 turned solid blue.

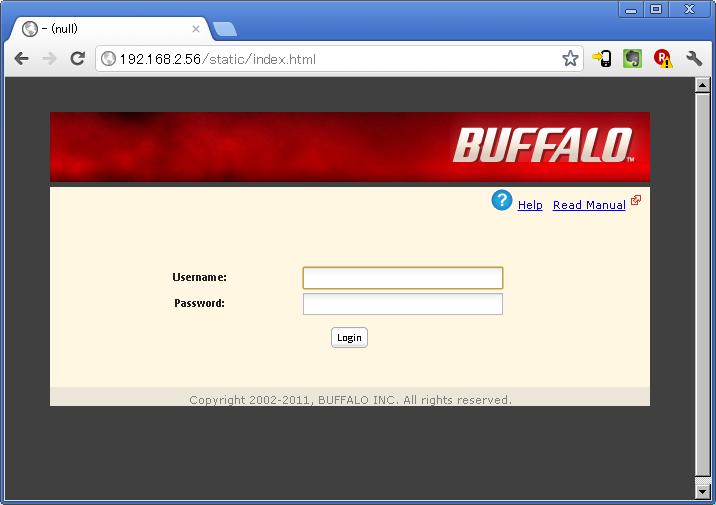

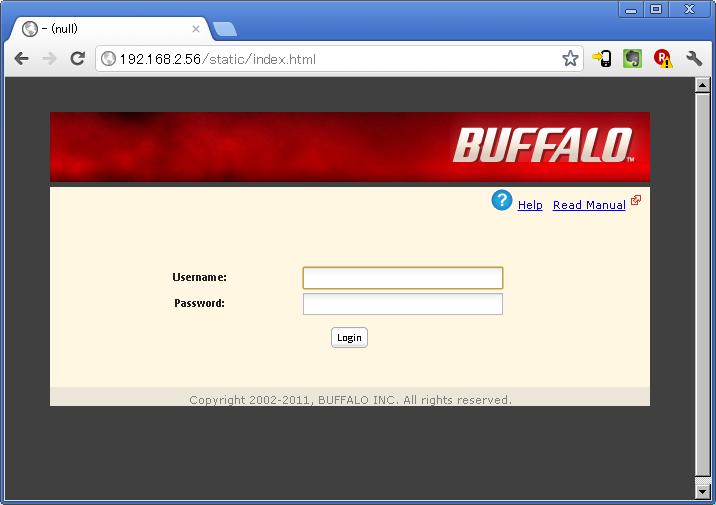

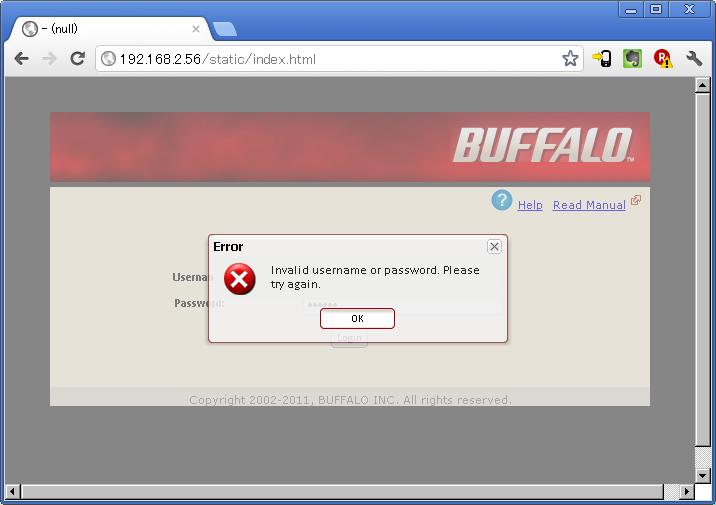

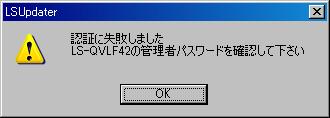

Even with the default password entered,

all I get is an error.

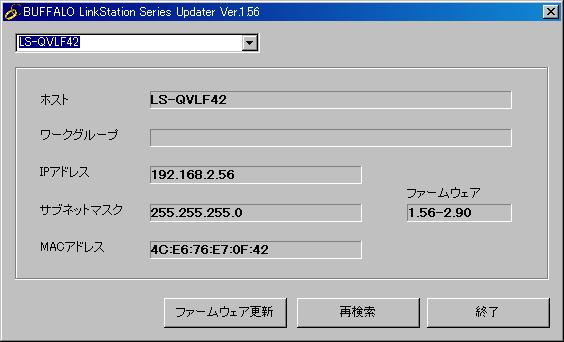

Found it. Updating the firmware from here should do the trick!

Click "ファームウェア更新" (Update Firmware),

and enter the default password as the administrator password...

Leaving the password blank gives the same error.

Now I'm stuck...


Copyright (C) 2003-2012 Yasunari Yamashita. All Rights Reserved.

yasunari @ yamasita.jp Yasunari Yamashita (山下康成), Muko, Kyoto Prefecture